When Schuppanzigh’s quartet first played Beethoven’s first Razumovsky Quartet (opus 59.1), they laughed and were convinced the composer was playing a trick on them. “Surely, you do not consider this music?” asked the bemused violinist Felix Radicati. “Not for you,” replied Beethoven, “but for a later age.”

When asked at congresses and medical meetings what I consider the fundamental changes in contemporary cardiology compared with the previous decade, I used to answer: treating arrhythmias with ablation and devices rather than with drugs; doing exactly the opposite for stable coronary artery disease; and implementing genetics in clinical practice.

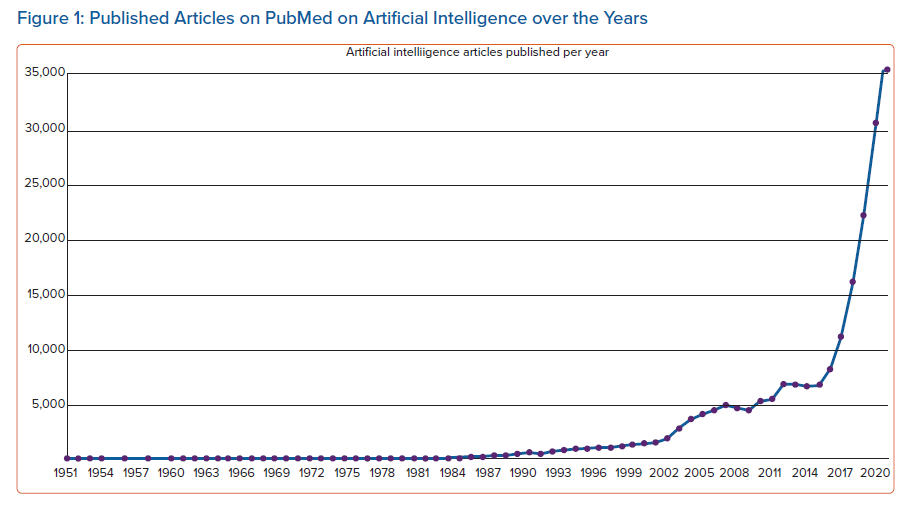

In the last few years, I feel obliged to add another important development: artificial intelligence. The reason is explained by Figure 1. A search in PubMed for articles published in 2020 about artificial intelligence produced 110,855 results, a search for the following year generated 139,304 and, in December 2022, 187,050 were found. And as Vaclav Smil has shown, numbers don’t lie.1–3 Indeed, they do not – but they do occasionally play dice. Michael Stifel, a German mathematician and friend of Martin Luther, decoded the name Pope Leo X to discover that it represented 666.

Will AI-based programs and machines become dominant in clinical medicine? This concept is certainly gaining pace in certain disciplines, with robotic surgery and electroanatomical mapping being more and more helpful, if not indispensable, in the interventionalist’s hands. Well, the crucial question is: what about the thinking doctor’s mind?

In a literature search, mainly of editorials and scholarly reviews on the issue, I was rather impressed by the prevailing conviction that the use of AI in clinical practice is inevitable. Digital technology, virtual hospitals and digital doctors are terms appearing in the first issue of European Heart Journal in 2023.4,5 Naturally, the younger the doctor, the more optimistic their views on the implementation of this revolution, and in the world of basic scientists (non-clinical scientists, i.e. molecular biologists, theoretical physicists) and computer scientists, such beliefs are even stronger and more established.

You see, sometimes we cannot hide our true identity despite our desperate need for disguise. Marquis de Condorcet, wholly a man of the Enlightenment, a champion of free speech and a staunch supporter of the French Revolution, fiercely criticised the draft of the 1793 French constitution. Robespierre’s Committee of Public Safety, truly respecting the tradition that revolutions devour their own children like Cronus, issued a warrant for his arrest. Condorcet fled Paris and, one evening, in torn clothes and feeling famished, entered an inn and ordered an omelette.

“How many eggs do you want for your omelette?” asked the innkeeper. “Twelve,” Condorcet replied. The innkeeper intuitively realised that only an aristocrat would have requested 12 eggs for an omelette, and Condorcet was promptly arrested and died in prison. As Kant reminds us, we see things not as they are but as we are.

Is artificial intelligence simply not intelligence, since machines cannot learn a model of the world the same way the human neocortex with its 150,000 columns does, as Jeff Hawkins claimed in his new theory of intelligence?6 How can we expect AI to replace the human brain when we do not actually know how exactly the human brain works?

To take the argument further, if AI is not intelligent, it is also not artificial in the true sense of the word. We are dealing with real computer programs based on scientific principles of digitisation and processing.

There are plenty of wrong connotations and unsubstantiated beliefs that stand the test of time by collective self-submission. Take paradise, for example, which is heaven according to most religions. Paradise was simply a Greek paraphrase (paradeisos) of the old Iranian parādaiĵah, meaning walled enclosure, and denoting the large, walled parks established near capital cities that served the Assyrian and Persian kings for hunting lions as a display of power and domination. It is interesting to consider that billions of pious people pray to go to a place initially used for hunting or being hunted by lions.7

It is the same with our brains and human intelligence. We talk about the brain and compare it to computer programs and algorithms without having really understood its very function. Nothing short of exhilarating with our brain, in Richard Dawkins’ words.

It is through this super complex and virtually unexplored organ, the human brain, that doctors experience intuition and intervene, and offer an individualistic approach to each patient. Let’s not forget that, despite what Freud claimed, the unconscious mind’s capacity is far more wide-ranging than, and superior to, that of the conscious mind. This is why, according to George Sakkal, Paul Cézanne was most probably right about his theory of art, whereas Marcel Duchamp, with his post-modern urinal, was not.

Neuroscientific studies have revealed that the unconscious mind is also cognitive.8 It monitors, controls, decides and guides the way we determine behaviour and even rational thinking. This, for a doctor, is translated into experience, intuition or post-traumatic stress following interventions. Medicine may not be an art – at least in the way Paul Gauguin and Oscar Wilde saw art as the most intense form of individualism and, as Baudelaire dubbed it, prostitution – but it is still not yet a strictly scientific process that can be run in a computer program.

The sceptic might inevitably think of AI as just another fashionable habit spreading among humans who traditionally get collectively overexcited and coerced, hide-bound by trendy, prevailing concepts that they wholeheartedly accept and transform into viral entities advertised in science, the lay press and the entertainment industry.

Well, if liberty means anything at all it means the right to tell people what they do not want to hear, in George Orwell’s immortal words, although Hubert Humphrey was also right in claiming that the right to be heard does not automatically include the right to be taken seriously. Is, therefore, all this tremendous excitement about AI ‘factfulness’ in the way the Roslings defined it, ‘enlightenment now’ or just wishful thinking, as computer scientist and inventor Erik Larson insisted in his recent Myth of Artificial Intelligence?9–11

“You older folks are retrogressive, refusing to follow developments or welcome potential progress” is something I expect to hear. In 1536, William Tyndale was burned alive for being the first to translate the Bible into anything close to modern English. In 1994, the British Library paid more than £1 million for one of Tyndale’s original copies, which it called “the most important book in the English language”.

Francisco Mojica, the inventor of CRISPR, had been trying for 3 years to publish his paper even in a journal with a relatively low impact factor, and eventually, Doudna and Zhang were suing each other for the Nobel.12 After all, Mark Twain was right: “The man with a new idea is a crank until the idea succeeds.”

Perhaps, therefore, remembering John Maynard Keynes, it is better to be approximately right than precisely wrong, and the most reasonable answer is “we don’t know yet”.

According to the first law of palaeontology, all species go extinct after a period, and mammals have an average species lifespan from origination to extinction of about 1 million years. The first species of human, Homo habilis, evolved approximately 2.3 million years ago, but H sapiens evolved roughly 200,000 years ago.13 Who knows what will happen in the next 800,000 years?

And we are not talking about progress only in science and technology and quantum computing, which should allow incredible volumes of data handling. We are also talking about the human brain itself that continually changes – as a result of evolution according to Darwin or intelligent design according to the Grand Inquisitor Tomás de Torquemada and the Discovery Institute.

There are 86 billion neurons (8.6 × 10¹⁰) in the human brain, each with possibly tens of thousands of synaptic connections that are modified and change following even the simplest intellectual activity. The response of the public to Beethoven’s third symphony or the Razumovsky and later quartets is probably indicative of the ever-evolving nature of the collective human intellect.14,15

Demosthenes G Katritsis

Editor-in-Chief, Arrhythmia & Electrophysiology Review

Hygeia Hospital, Athens, Greece